2018 could be the year when the U.S. takes back the lead over China with the most powerful supercomputer in the world. It could be the year when the AI-power war over computing power started.

2017 is the year when Artificial Intelligence started creating Artificial Intelligence (AI). It is the year when China overtook the US in the total number of ranked supercomputers and in aggregate computing performance.

All these events are deeply shaping our future … and our present. They are recasting how wars will be and are already waged, while strategy and, indeed, the scope of national security, become considerably extended. They are intimately connected. Understanding why and how is crucial to foresee what is likely to happen and is already happening, and fathom how the emerging AI-world will look like.

This article and following, using concrete examples and cases, will explain how and why AI and computing power are related. They will thus focus on hardware and computing power as a driver, force and stake for AI’s development, in the Deep Learning (DL) AI-subfield. Previously, we identified computing power as one of the six drivers that not only act as forces behind the expansion of AI but also, as such, become stakes in the competition among actors in the race for AI-power (Helene Lavoix, “Artificial Intelligence – Forces, Drivers and Stakes” The Red Team Analysis Society, 26 March 2018). We looked in detail at the first driver with “Big Data, Driver for Artificial Intelligence… but Not in the Future?” (Helene Lavoix, The Red Team Analysis Society, 16 April 2018)

Here we shall start with latest – and most striking – cases exemplifying the tight relationship that exists between hardware and its computing power and the current exponential development of AI, or more exactly the expansion of DL. Computing power and AI-DL are actually co-evolving. We present two cases of AI-DL creating Neural Nets architectures thus DL: Google’s AutoML project, as well as its impact for example in terms of computer vision applications, and U.S. Department of Energy’s Oak Ridge National Laboratory (ORNL) MENNDL (Multinode Evolutionary Neural Networks for Deep Learning), and relate them to the computing power needed. We thus start identifying crucial elements of computing power needed for AI-DL, stress the potential for evolutionary algorithms, as well as point out that we may now be one step closer to an Artificial General Intelligence.

We shall dig deeper in this coevolution with the next articles, understanding better how and why DL and computing power/hardware are related. We shall look more in detail at the impact of this relationship and its coevolution in terms of hardware. We shall thus outline the quickly evolving state of play, and identify further areas that need to be monitored. Meanwhile, as much as possible, we shall explain the technical jargon from TFLOPS to CPU, NPU or TPU, which is not immediately understandable to non-AI and non-IT scientists and specialists, and, yet, which now needs to be understood by political and geopolitical analysts and concerned people. This deep-dive will allow us to understand better the corresponding dynamics emerging from the new AI-world that is being created.

Indeed, we shall explain how the American seven-year ban on Chinese telecommunication company ZTE and other related American actions must be understood as a strategic move in what is part of the AI-power race – i.e. the race for relative power status in the new international distribution of power in the making – and looks increasingly like the first battle of a war for AI-supremacy, as suggested by the U.S. tightening actions against Chinese Huawei (e.g. Li Tao, Celia Chen, Bien Perez, “ZTE may be too big to fail, as it remains the thin end of the wedge in China’s global tech ambition“, SCMP, 21 April 2018; Koh Gui Qing, “Exclusive – U.S. considers tightening grip on China ties to Corporate America“, Reuters, 27 April 2018).

As we foresaw, AI has already started redrawing geopolitics and the international world (see “Artificial Intelligence and Deep Learning – The New AI-World in the Making” and “When Artificial Intelligence will Power Geopolitics – Presenting AI”).

Starting to envision AI creating AIs

Google’s AutoML, the birth of NASNet and of AmoebaNets

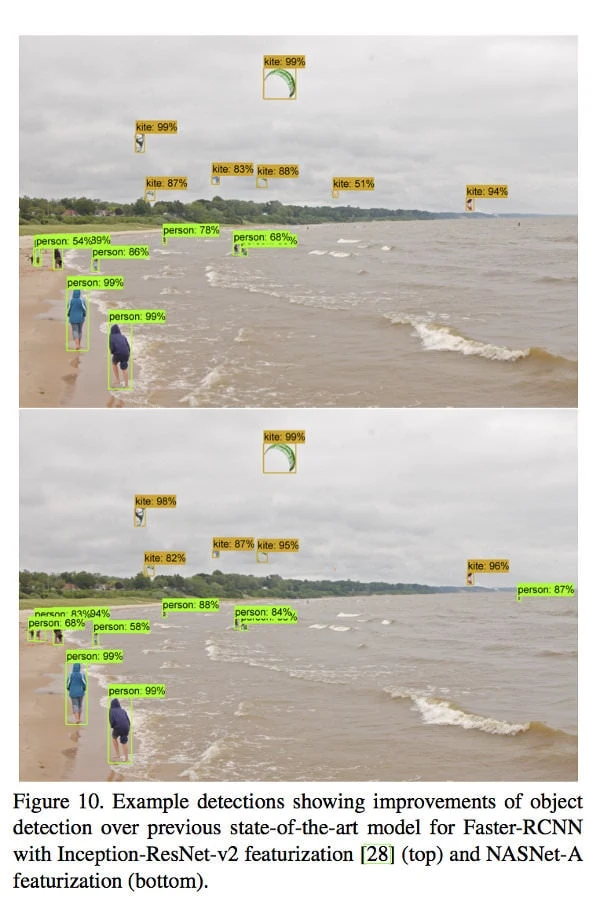

In May 2017, Google Brain Team – one of Google’s research lab – announced it had launched “an approach” called AutoML which aimed at “exploring ways to automate the design of machine learning models”, using notably evolutionary algorithms and Reinforcement Learning (RL) algorithms, i.e. one aspect of DL and thus AI (Quoc Le & Barret Zoph, “Using Machine Learning to Explore Neural Network Architecture“, Google Research Blog, 17 May 2017). They first successfully applied the approach for image recognition and language modeling but with small datasets: “our approach can design models that achieve accuracies on par with state-of-art models designed by machine learning experts (including some on our own team!).” (Ibid.) Then, they tested AutoML for large datasets “such as ImageNet image classification and COCO object detection” (Barret Zoph, Vijay Vasudevan, Jonathon Shlens and Quoc Le, “AutoML for large scale image classification and object detection, Google Research Blog, 2 Nov 2017). As a result, NASNet was born, in various size, which, for image recognition achieved higher accuracy and lower computational costs than other architectures (see Google’s figure on the right hand side). For example, “The large NASNet achieves state-of-the-art accuracy while halving the computational cost of the best reported result on arxiv.org (i.e., SENet)”. [5] The results for object detection were also better than for other architectures (Ibid.).

As pointed out by Google’s scientists, NASNet may thus tremendously improve computer vision applications (Ibid.). Considering the importance of computer vision for robotics in general, for Lethal Autonomous Weapon System (LAWS) in particular, being able to use NASNet and NASNet types of architecture, and even more to create better programs may become crucial. Google “open-sourced NASNet for inference on image classification and for object detection” (Ibid.), which should limit – to a point considering the need to also use Google’s platform TensorFlow machine learning framework, as well as the starting war between the US and China over AI (forthcoming) – the possible use of NASNet by one actor and not another. This example, shows that being able to develop and run an “AI that creates AI”, which are better than human-designed AI architectures may prove crucial for the started AI-power race, including in terms of possible future warfare, as well as for AI-governance.

A GPU is a Graphics Processing Unit. It was launched as such by NVIDIA in 1999 and is considered as the crucial component that allowed for the take-off of DL.

A CPU is a Central Processing Unit. It was the norm notably before the advent of GPU and the expansion of DL-AI.

Both microprocessors are built with different architectures with different objectives and functions, e.g. Kevin Krewell, “What’s the Difference Between a CPU and a GPU?” NVIDIA blog, 2009.

If the resulting AI-architectures have “less computational cost” than human-designed ones, what is the computing power necessary for the creating AI? According to Google scientists, “the initial architecture search [utilised for AutoML] used 800 GPUs for 28 days resulting in 22,400 GPU-hours. The method in this paper [NASNet] uses 500 GPUs across 4 days resulting in 2,000 GPU-hours. The former effort used NVIDIA K40 GPUs, whereas the current efforts used faster NVIDIA P100s. Discounting the fact that we use faster hardware, we estimate that the current procedure is roughly about 7× more efficient (Barret Zoph, Vijay Vasudevan, Jonathon Shlens, Quoc V. Le, “Learning Transferable Architectures for Scalable Image Recognition“, Submitted on 21 Jul 2017 (v1), last revised 11 Apr 2018 (this version, v4), arXiv:1707.07012v4 [cs.CV] ).

Google Brain Team continues its AutoML efforts at finding thebest way(s) to develop AIs that create AI. Out of an experiment to compare the relative merits of using RL and evolution for architecture search to discover automatically image classifiers was born evolutionary algorithms AmoebaNets: “This is the first time evolutionary algorithms produce state-of-the- art image classifiers” (Esteban Real, Alok Aggarwal, Yanping Huang, Quoc V Le, “Regularized Evolution for Image Classifier Architecture Search“, Submitted on 5 Feb 2018 (v1), last revised 1 Mar 2018 (this version, v3) arXiv:1802.01548v3 [cs.NE]).

A TPU is a Tensor Processing Unit, the Application Specific Integrated Circuit created by Google for AI purposes and launched in May 2016

However, as the scientists point out, “All these experiments took a lot of computation — we used hundreds of GPUs/TPUs for days” (Esteban Real, “Using Evolutionary AutoML to Discover Neural Network Architectures“, Google Research Blog, 15 March 2018). The dedicated evolution experiment “ran on 900 TPUv2 chips for 5 days and trained 27k models total”, while each large-scale experiment “ran on 450 GPUs for approximately 7 days” (Real et al, Ibid., pp 12 & 3).

Hence, computing power is crucial in this new search for DL systems creating other DL systems or AI systems creating AIs. If the result obtained can then reduce the computational cost, it is only because, initially, powerful hardware was available. Yet, however large Google’s computing power dedicated to AutoML, it is not – yet? – on a par with what may happen with supercomputers.

Fast AI-Creator … but only with a Supercomputer

MENNDL – Multinode Evolutionary Neural Networks for Deep Learning

On 28 November 2017, scientists at U.S. Department of Energy’s Oak Ridge National Laboratory (ORNL), announced they had developed an evolutionary algorithm “capable of generating custom neural networks” – i.e. artificial intelligence (AI) systems in its Deep Learning guise – “that match or exceed the performance of handcrafted artificial intelligence systems” for application of AI to scientific problems (Jonathan Hines for ORNL, “Scaling Deep Learning for Science“, ORNL, 28 November 2017). These new AI systems are produced in a couple of hours and not in a matter of months as if human beings were making them (Ibid.), or days and weeks as what Google achieved.

FLOPS means Floating Point Operations Per Second.

It is a measure of computer performance.A teraFLOPS (TFLOPS) represents one million million (1012) floating-point operations per second.

A petaFLOPS (PFLOPS) represents 1000 teraFLOPS (TFLOPS).

However, this feat is only possible because MENNDL (Multinode Evolutionary Neural Networks for Deep Learning) – the evolutionary algorithm that “is designed to evaluate, evolve, and optimize neural networks for unique datasets” – is used on ORNL’s Titan computer, a Cray XK7 system. This supercomputer was the most powerful in the world in 2012 (Top500 list, November 2017). In November 2017 it ranked ‘only’ number five, but was still the largest computer in the U.S. (Ibid.): “Its 17.59 petaflops are mainly the result of its NVIDIA K20x GPU accelerators” (Ibid.)

Now, the ORNL should get a new supercomputer, which should be online in late 2018, Summit, a “200-petaflops IBM AC922 supercomputer” (Katie Elyce Jones “Faces of Summit: Preparing to Launch”, ORNL, 1 May 2018). “Summit will deliver more than five times [five to ten times] the computational performance of Titan’s 18,688 nodes, using only approximately 4,600 nodes when it arrives in 2018.” (Summit and Summit FAQ). Each Summit node consists notably “of two IBM Power9 CPUs, six NVIDIA V100 GPUs” (Summit FAQ). This means that we have here the computing power of 9200 IBM Power9 CPUs and 27600 NVIDIA V100 GPUs. For the sake of comparison, Titan has 299,008 CPU Opteron Cores and 18,688 K20X Keplers GPU, i.e. 16 CPU and 1 GPU per node (Titan, ORNL) .

By comparison, China – and the world – most powerful computer, “Sunway TaihuLight, a system developed by China’s National Research Center of Parallel Computer Engineering & Technology (NRCPC), and installed at the National Supercomputing Center in Wuxi” delivers a performance of 93.01 petaflops (Top500 list, November 2017). It uses 10,649,600 Shenwei-64 CPU Cores (Jack Dongarra, “Report on the Sunway TaihuLight System”, www.netlib.org, June 2016: 14).

In terms of energy, “Titan demonstrated a typical instantaneous consumption of just under 5 million watts (5 Mega Watts or 5MW), or an average of 3.6 million kilowatt-hours per month (3.6MkW/h/m)” (Jeff Gary, “Titan proves to be more energy-efficient than its predecessor“, ORNL, 20 August 2014). The expected energy Peak Power Consumption for Summit is 15MW. Sunway TaihuLight would consume in energy 15,37MW (Top500 list, Sunway TaihuLight). Titan power efficiency is 2, 143 GFlops/watts and ranks as such 105, while Sunwai TaihuLight’s power efficiency is 6,051 GFlops/watts and ranks 20 (The Green500, Nov 2017).

Titan’s computing approaches the exascale, or a million trillion calculations a second (Titan), while Summit should deliver “exascale-level performance for deep learning problems—the equivalent of a billion billion calculations per second”, far improving MENNDL capabilities, while new approaches to AI will become possible (Hines, Ibid.).

The need for and use of immense computing power related to the new successful quest for creating AIs with AI is even clearer when looking at the ORNL MENNDL. Meanwhile, the possibilities that computing power and creating AIs yield together are immense.

At this stage, we wonder how China notably, but also other countries having stated their intention to strongly promote AI in general, AI-governance in particular, such as the U.A.E. (see U.A.E. AI Strategy 2031 – video) are faring in terms of AIs able to create AIs. May smaller countries compete with the U.S. and China in terms of computing power? What does that mean for their AI strategy?

With these two cases, we have identified that we need to add another type of DL, evolutionary algorithms, to the two upon which we have focused so far, i.e. Supervised Learning and Reinforcement Learning. We have also started delineating fundamental computing power elements such as types of processing units, time, number of calculations per seconds, and energy consumption. We shall detail further our understanding of these in relation to AI next.

Finally, considering these new AI systems, we must point out that their very activity, i.e. creation, is one of the elements human beings fear about AI (see Presenting AI). Indeed, creative power is usually vested only in God(s) and in living beings. It may also be seen as a way for the AI to reproduce. This fear we identified was meant to be mainly related to Artificial General Intelligence (AGI) – a probably distant goal according to scientists – and not to Narrow AI, of which DL is part (Ibid.). Yet, considering the new creative power of DL that is being unleashed, we may now wonder if we are not one step closer to AGI. In turn, this would also mean that computing power (as well as, of course algorithms as the two coevolve) is not only a driver for DL and narrow AI but also for AGI.

Featured image: Graphic regarding Summit the new supercomputer of the Department of Energy’s (DOE) Oak Ridge National Laboratory. Image cropped and merged with another, From Oak Ridge National Laboratory Flickr, Attribution 2.0 Generic (CC BY 2.0).